AMA | Episode 3 - The AI Agent Takeover: Physical AI and Robotics (Featuring Config Intelligence)

Jun 4, 2025

In this AMA, our Marketing Lead Joules Barragan is joined by our Co-founder & CEO Sean Ren and Config Intelligence Co-founder & CEO and an associate professor at KAIST Minjoon Seo. Together, they explore the next frontier for AI agents, bringing them into the physical world. From teaching robots to learn by watching human demonstrations to tackling the massive data bottlenecks in robotics, they discuss how lowering development costs and rethinking data ownership can accelerate the arrival of truly general-purpose machines. Whether you’re curious about bimanual robot training, the role of on-chain provenance in AI, or the path from niche tasks to household companions, this session dives into the breakthroughs, challenges, and ecosystem shifts shaping physical AI.

Link: https://x.com/i/broadcasts/1kvJpyaVrlPxE

Transcript

Joules: Hello everybody. Welcome to our third AMA in our Agent Takeover series. I'm Joules with Sahara AI. I'll be your host today. This is episode three of our AI Agent Takeover series. We've got an exciting AMA today featuring two incredible minds in AI. Our very own Sean Ren, our CEO and co-founder of Sahara.

Sean: Hey guys, I'm back. Excited to chat with some new guests.

Joules: Yeah, speaking of new guests, we have Minjoon Seo. Minjoon is the co-founder and CEO at Config Intelligence and an associate professor at KAIST. He is working on drastically reducing the cost and time of developing bimanual robot models by leveraging human manipulation data. He's also the recipient of the Best Paper Award at NAACL 2025 and the AI2 Lasting Impact Paper Award in 2020. Thank you for joining us today, Minjoon.

Minjoon: Yeah. Thank you for inviting me to this.

Joules: Yeah, anytime. So today's AMA is going to be all about the next big step for AI agents, and we're going to delve into the world of physical AI and how robots are learning from human behavior. If you're listening and you have any questions throughout the AMA, just drop them in the comments and we'll get to them at the very end.

Okay, let's get started. Minjoon, I want to start with you. You spent years studying how language models reason and evaluate themselves. Now you're helping people build robots that basically learn by watching how humans move. Can you talk a little bit about the work you're doing now and what inspired you to bring those two worlds together?

Minjoon: Yeah. So as you introduced me and our company, we are working on how we can drastically reduce the development cost of developing models. So what that means is that if you think about it, nowadays only select companies which have a lot of funding can create the models or create the robots to do the task. And I think that's very unfortunate because of the cost, there are a very small number of things we could do. For instance, let's say you want to automate making hamburgers. It's really hard to do right now because you have to maybe spend a few million dollars or even more than that to create that. But then the market size for itself may not be, in many cases, not as big. And also you may not want to really spend that much money before actually seeing some results.

So we're seeing that the cost of development is the biggest bottleneck of having and creating the ecosystem for robots. And we're basically trying to reduce that. If we can reduce that, we think that the world will see robots around us sooner than later. So that's our biggest mission for us. And in order to do so, the biggest bottleneck right now is in fact getting the right data, because apparently data is the most important thing in AI. And how we can actually get the data more cheaply and also faster for robots, that's really the most important part, at least the biggest bottleneck right now in terms of developing robots. So we're helping our customers with that, how they can get the data more cheaply and faster and still maintain the quality.

I have been working on language models for many years. I think motivation-wise, I thought of AI as not just a language-specific thing. It was more like if we go back to 2009 or 2010, AI was really about including the physical motions. It's being able to do different things with humans. Not just language, not just vision, but actual actions. I think that's how I viewed AI. So I always wanted to create a system that could actually do things that humans are doing, just like robots. But initially, I thought the language has to be solved first or has to be tackled first to get there. And I felt that reasoning is really stemming from language.

But as you all know, we are now seeing a lot of advancements in language and also in vision too. So language and vision are being advanced a lot, and speech too. So I think the ingredients are getting ready to make a more complete AI that can actually do not just the language, but also physical tasks and everything. And I think that was closer to my passion from my earlier days. So I now think that we have the technology and the timing is right to actually work on this. So that's the biggest reason that I have been working on robots more recently.

Joules: Yeah, that's really cool. Sean, Minjoon brought up a really good point about data and the lack of data to really support a lot of this physical AI development. I know at Sahara AI we're really focused on data. I'm wondering if you have any comments around how important data is to AI development.

Sean: Yeah, I want to go beyond just data. We're talking about robots here, whether that's virtual robots that interact with people on Twitter, trying to help the owner reply and engage with their fans, or physical robots that actually sit at your house and do all sorts of daily, repetitive, or creative stuff for you. In either case, I think the fundamental problem here is the robot, the agent, has to be personalized towards your use cases and how you interact with it. Either it becomes an assistant to you, trying to become a co-pilot of your work, increasing your productivity, or it becomes a personal friend that engages with you, entertains you, and makes you feel more fulfilled.

In either case, they need to digest a lot of your internal information that you would externalize to tell the agents, or the agent has to familiarize itself with your living environments, like who you talk to, what you talked about with these other people, and all of the historical context. I think all this data is highly personal and oftentimes very sensitive. Imagine if OpenAI has access to all this data from hundreds of millions of users. There's a huge problem of privacy and there's another huge problem of ownership and potential monetization rights of this data. If you have these two problems, it will become very scary.

If you think about OpenAI actually learning all of the personal conversations and the living situations of hundreds of millions of people, and then they can try to come up with another generation of AI that basically understands everyone, like those sci-fi movies that you've seen. So at that stage, it's really concerning to me how humans should feel about their privacy and how humans should feel about their copyrights and control over their personal data. I think this is fundamentally asking about a new paradigm to establish the relationship between the owners of the data and owners of the model or agents, and also the consumers of the data and models and the developers of the models.

So that's the core problem statement for Sahara AI to look into, totally independent of the huge focus on making AI more capable and more competent. I think both are equally important. But apparently these days people are getting much more excited about pushing AI to be much more competent and capable than thinking about the problem of what if one day this AI knows everything from your emails and conversations and text messages, and they're going to do something behind the scenes that you are not even aware of? I think what's driving Sahara AI is to really leverage this. We all believe in this AI-driven future where every one of us, using our agent, can realize our creative ideas and also make every other person more productive and happier. But in that process, if you don't have protections of your AI and if you don't have the transparency of how your data was used in the downstream process, then it's really concerning.

Joules: Yeah, that makes a lot of sense. You made me think of something talking about transparency, provenance, and ownership, especially in this AI-driven future that we're talking about. There's been a lot of talk in the blockchain space for a while now about this future machine-to-machine economy where you can have robots, even just self-driving cars, that'll have their own cryptocurrency wallets. They can earn income by the services they perform, pay for services like filling up on gas, getting their car tuned up, and essentially operate with some degree of autonomy. Do you think that is a realistic future, and if so, what needs to happen, either technically or legally, for us to actually get there?

Minjoon: Yeah, that's actually a really interesting question. As Sean said, there are different kinds of agents, but I think at the end we want everything to be connected. Even for robots, I think there is an abstract layer of agents that operates for planning and reasoning. So I think they're all connected. Basically, what's the timeline for these robots to come to us or actually be around us? Because apparently, we're not seeing them right now, unlike ChatGPT or other AIs. And I think the biggest bottleneck here, in fact, is the fact that there was no ecosystem for robotics data. That's very different from language models or other kinds of AIs. They are, in fact, built on internet data. So those data have come from all the users, right? It's not created by a single user or a single company. Google doesn't own that data. OpenAI doesn't own that data. The data is coming from the users. And thankfully, those users have agreed to share their precious data on the internet for many years, like code and papers, which are the highest quality data that we're talking about. Or even Reddit posts. They just happen to agree to share them on the internet. And many of these companies could leverage this data and train models on it.

So people might think that, "Oh, data is free, and GPUs are not free, humans are not free, so we have to spend money on GPUs and humans." But that's not the case when there is no such ecosystem. For robotics, for instance, there was no such ecosystem. So there isn't data that was created or shared by other people. It's not just that they're not shared; they're not created at all yet because it has to be created through robots, which do not exist at scale yet. So I don't know how this ecosystem will come. I think it will probably need a lot of players and probably will need a company like Sahara to also really play in this kind of area. And of course, we want to be accelerating that process too.

But I think that there will be a lot of companies that will need to be involved here and a lot of participants, a lot of users, to really create this ecosystem. And that ecosystem has to grow so that the amount of data that gets accumulated on the internet or in the world has to surpass a certain amount to have a model that can be trained on it and do something just like ChatGPT for robots. So unlike many other companies in the physical AI domain who think the movement will come really soon, I think that's really difficult because of the lack of ecosystem data. We have to actually create the ecosystem. And those two have to go together to actually get there.

To be more concrete, I think it will take at least five to ten years to actually see really general-purpose robots. But it doesn't mean that we will not see robots around us. I think it'll be very task-specific, and borrowing Sean's words, it will be personalized. I think initially, we need that phase so that we can have more robots and more players in this ecosystem. But then when that ecosystem grows big enough, then I think humanity as a whole, the civilization as a whole, has a chance to create really general-purpose robots.

Sean: Yeah, to add on what Minjoon said, I think I see in the future at least these two types of agents. One type of agent is basically representing individuals, like a proxy that operates in the virtual or physical worlds that does things 24/7 for the owner. And I see another type of agent that is more goal-driven. For example, there are already existing agents in the crypto markets that are deployed by these institutions trying to capture any sort of yield opportunities across different DEXs and exchanges. I think in the future we are already surrounded by agents that are very task-specific, goal-driven.

I was even thinking about those autonomous vacuum machines in my house. They just run through your house, use their camera to take videos of the entire house, and then send it to the centralized servers. So the company probably knows what hundreds of thousands of people's houses look like, and even the pets in the house and everything. So it's scary if you think about it without proper compliance and regulation. But I think it's already happening now.

I guess the biggest concern I have is if these agents make mistakes, who is responsible for those costs? We can even think about autonomous driving cars. They just run on the street. In Los Angeles, the city where I am, there are hundreds of Waymo cars running on the street right now. And they've been doing pretty well. I also know there are people behind the scenes operating those vehicles; they are not entirely autonomous. But one day, they might be pretty autonomous. And if they cause car accidents, who is taking the responsibility for that? I think until we figure out those low-level but very high-stakes questions, it's really hard to get these agents running. We might have some very low-stakes agents running around. Like on Twitter, you see a bunch of bots basically posting stuff. You can say those are low-stakes agents because they don't cost people's lives, but they're also really impacting people's moods, to be honest. I just want to give a bunch of examples so people know the nuances in this question.

Joules: So we've been talking a lot about data. Minjoon, you recently worked on a method for training robots using human demonstration videos, right? I believe you even proposed a method for learning from large-scale, unannotated, or even weakly labeled human demonstration videos. Can you talk a little bit about that? What's the biggest breakthrough that made that possible?

Minjoon: Yeah, so it's work we did in collaboration with Nvidia and Microsoft last year. The work's name is actually Latent Action Pre-training (LAPA). That work was mostly focused on how we can utilize human data. We want to train robots from human demonstrations, but traditionally that was very difficult because human demonstrations have, of course, the input, which is videos, but they don't have the output, which is the exact coordinates of the human hands. And even then, human hands and robot hands are different, so they don't really translate easily. That was the bottleneck.

So the technology was really about how we can utilize human data for training robots. And we were able to actually show that the human data could be as effective as the robot data. That's very meaningful because getting human data is much easier than getting robot data. If you want to get robot data, you need to have robots, and not many people have robots. But what's really important, we thought, is that the IP in physical labor is really... let's say you are a carpenter. It's the physical knowledge that is embodied in your data. Your demonstration of doing something is actually your IP too, in some sense, although it's really hard to patent that or get paid for it. But it's really important knowledge. And basically, that's what we thought humans could create. But it's really hard to utilize in its raw form because it's human data.

So what we wanted to do was, can we connect that? Can the robots utilize human data so that they can utilize the demonstrations more easily? And if we could do that, then what that could mean in the long run is that we could utilize more and more data that people produce themselves, making value out of it. We were able to show in the early results that it is as good as robot data. So our company is expanding on that. How can we, in fact, utilize human data more easily, more accurately? We really think that's a really important part of making this advancement.

Joules: Well, that's really cool. Sean, I know we're working on a lot of different ways to make it easier for developers to get the data they need as well, especially when it comes to really bespoke data, such as some of the stuff that Minjoon is talking about. Do you want to talk a little bit about that and what we're working on?

Sean: Yeah. I really like the work that Minjoon just described. To give everyone a little bit more context, Sahara AI has this Data Services Platform, which is a decentralized platform for people to come in, help browse what potential data projects the demand side or other users are looking for, and then try to work on different types of data collection while also becoming part of the co-owner of the datasets. So you get both the ownership of the dataset down the road as well as rewards, incentives, and direct payment for the efforts you spend on the dataset. It's really a hybrid outcome compared to the old-school models such as what Scale AI does, which is to give you a flat-rate payment for what you have done for the dataset.

So this is really an innovative way of incentivizing people to come and leverage their expertise to help collect and label data. And I think there's a very organic connection to the robotics data that Minjoon just described. I was just off a lunch meeting with one of my USC professor friends today, and he was describing pretty much the same challenges. These companies like Google and Nvidia are putting a lot of effort into collecting robot data. They buy hundreds of these little robots, and these little robots have to be hand-by-hand monitored and operationalized by real humans in very controlled lab experiments, like manufacturing of these robots. And then they record videos of those robots, and those data are the most high-quality and usable data.

But apparently, scale is the problem. You can only work on this amount of videos per day with hundreds of robots, and it's going to be really cost-inefficient to throw hundreds of thousands of robots into real life and let amateurs play with them. So that's why I think what Minjoon described is the future. Suppose we have this breakthrough of getting equal-quality data from just a human egocentric view of what they are doing: you're cooking in a kitchen, and we see how your hands are operating the knife and all these ingredients and making an omelet. If that data becomes as useful as a robot doing the same thing in terms of training the robot to mimic or imitate the stuff, then that's a huge, huge breakthrough for the whole robot learning community.

If that technology is ready, I think what Sahara's Data Services Platform could bring is that we can connect that technology of collecting human egocentric data to hundreds of thousands of our data service providers on the platform. That might be as simple as every person taking their cell phone, maybe putting it on a specific device on top of their forehead, and then they give you the egocentric video clips of you doing anything. Even grooming your pet. And this will basically give us millions of hours of human operational data to feed into the robot learning process. And I don't know how far we are. Minjoon, you mentioned this is like 5 to 10 years. I hope it's sooner, but I feel like we are very ready to embrace that technology and enable the next generation of robot learning.

Minjoon: Yeah, I think as you said, if the ecosystem can be created faster than what I said, I think it could definitely come earlier, and I hope it actually does. I think it could be much shorter if the ecosystem can be created really fast.

Joules: Yeah, that's amazing. So obviously, you haven't always been in physical AI. I'm wondering how your past research has really shaped how you think about this physical AI world that you're in now.

Minjoon: Yeah. So I think one of the biggest lessons, or I would say epiphany, that I had as a researcher in the language and vision field was that how the AI is trained is really data-dependent. And although I think everyone knows that, of course, models are data-dependent, I think it's not just data-dependent. The model's entire function is defined by the data. That's quite different from the traditional machine learning or traditional AI philosophy where, of course, data is important, but in many cases, as important are things like the architecture of the model. But how I view it is that the architecture of the model is rather a container. It's not really shaping how the model behaves or how good the model is. The container has to be big enough to contain enough information, but it's not like it's really shaping anything. Data is really defining how the model works.

If we believe in the 100% data-driven model, then what that means is that whether it's just language models or even physical AI, whatever the AI is we're trying to achieve, what really matters is how you define the input and outputs and whether you have a lot of them. High quality input-output. In this case, high quality really means that the data you have is really covering the type of input that you will actually give to it. And also, is the output what you're really desiring? So is the output really corresponding well to the input?

It's really about having the quality, and if we agree with that, then even tackling the physical AI problem, although people may think it's a different problem than language models, it's really similar to, or I would say the same as, language models. You have to have a lot of input-output pairs for physical AI. What are the inputs and what are the outputs? Here the inputs are vision and language. Vision needs to see what's going on, and language is the instruction. Then the output will be exactly the action. The action can also be defined as a sequence of positions, right? Because if you want to define the actions, it's really about where your hand is or where the robot hand is.

Then if you can just define that in the input-output relationship, then physical AI can be trained with just the data and as little human intervention as possible. And if that's possible, then the focus has to be always on the data. That's what I learned from my earlier days in language and vision research: it's really agnostic to the problem we're trying to solve. It's really about how we define input and output and whether we have enough data to actually model that.
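The input-output framing Minjoon describes (vision and language in, a sequence of hand positions out) can be sketched as a small data structure. This is only an illustration under stated assumptions, not Config Intelligence's actual data format: the `Demonstration` class, the frame file names, and the `(x, y, z)` position tuples are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical representation: one (x, y, z) hand position per video frame.
Position = Tuple[float, float, float]


@dataclass
class Demonstration:
    frames: List[str]        # vision input (hypothetical frame file names)
    instruction: str         # language input
    actions: List[Position]  # output: the hand position at each frame

    def is_aligned(self) -> bool:
        """Every frame needs exactly one target position."""
        return len(self.frames) == len(self.actions)


def to_training_pairs(demo: Demonstration):
    """Split a demonstration into per-step (input, output) pairs."""
    if not demo.is_aligned():
        raise ValueError("frames and actions must have the same length")
    return [
        ((frame, demo.instruction), action)
        for frame, action in zip(demo.frames, demo.actions)
    ]


demo = Demonstration(
    frames=["frame_000.png", "frame_001.png"],
    instruction="pick up the cup",
    actions=[(0.10, 0.20, 0.30), (0.12, 0.20, 0.28)],
)
pairs = to_training_pairs(demo)
```

Each element of `pairs` holds the input (a frame plus the instruction) and the output (a position), which is the shape of supervised data that the discussion above refers to.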

Joules: Cool. Excellent. We are running pretty close on time, so I want to make sure we have time for questions. I just have a few more questions for you, Minjoon and Sean. So if someone wanted to get started in physical AI, what advice would you give them?

Minjoon: I think it really depends on which part of physical AI they're trying to do. For us, for instance, we're not right now, at least, developing our own robot hardware. We're using other people's hardware because developing these robots costs a lot. So you have to have a lot of capital, and you have to have a lot of expertise in how hardware really works. When we say physical AI, there are a lot of components to it. So I think the first question is which part of the physical AI we're trying to really focus on.

What we're trying to do is mostly on the software layer, but it doesn't mean that we don't need knowledge of hardware because we're using hardware a lot. So if someone's starting in physical AI, it's also a very good lesson for me too because, you know, at some point, I was also starting in physical AI. The important thing is that we need to be careful about the lead time of many ingredients. It's very different from software-only industries, because in the software-only industries that most AI people are used to, they can get things very fast because they just have to download it or it's on the web.

But when hardware gets involved, then you need to order it, you need to manufacture it, you need to create it. And there is a lead time for that. That lead time, when it accumulates, becomes so long. For instance, if you want to do something, you have a really great idea, and if that's a software-only project, then you can start right away. But in physical AI, if you have a great idea, you have to actually order different parts, and that will come maybe in a month or two. And then you start developing on them, and then you realize that, oh, one of the parts actually needs a design change. Then you will need to spend another one or two months to actually get the new parts. So the time will just fly if we're not careful.

I think especially for people who are mostly on the software side and are starting physical AI, that was the biggest lesson I had personally because I was also coming from mostly the software side. So that's one thing.

But if people are coming from the more hardware side and trying to do AI, I think the biggest thing that is the most important is to distinguish between traditional approaches to robotics and more AI-driven or data-driven robotics. I think it's very similar to how linguists have approached the language problem. I was also very linguistics-driven. But that basically means that, "oh, if we want to create good language models, then we need to have a really good knowledge of language itself." And I think that's not true these days. We don't need to know what a verb is or what a pronoun is to build a language model. We just need to have a data-driven method, just good data.

And the same lesson applies to robotics. The question is: do we need to know everything, every part of robotics to actually build a good model? It will definitely help, but I don't think we should rely on that. If we want to do it the data-driven way, we may want to be careful about being too domain-specific, which usually leads developers to try to invent the method. But I think what's really important is not inventing the method; it's actually teaching the model. It's very different. Is it invention or is it teaching? I honestly think that AI is more about teaching, not invention, in many cases. So that kind of philosophy is really important.

Joules: Cool. Sean, is there anything you want to add to that, as well as how Sahara AI can support builders who are working in this space?

Sean: Yeah, definitely. Data has been the key word in today's discussion and conversation. That's perfectly matched to one of our biggest drives: to really help incentivize users to bootstrap an ecosystem around useful data for AI while also giving them the proper ownership so that they can continuously monetize and benefit from the data.

In the context of physical AI, I hope to see everyone using all sorts of devices they own to create data useful for training robots and physical AI agents, and also to benefit from how that data is monetized down the road by either companies or other individuals. And that's definitely on the roadmap for how the Data Services Platform will support that as part of our application-layer products.

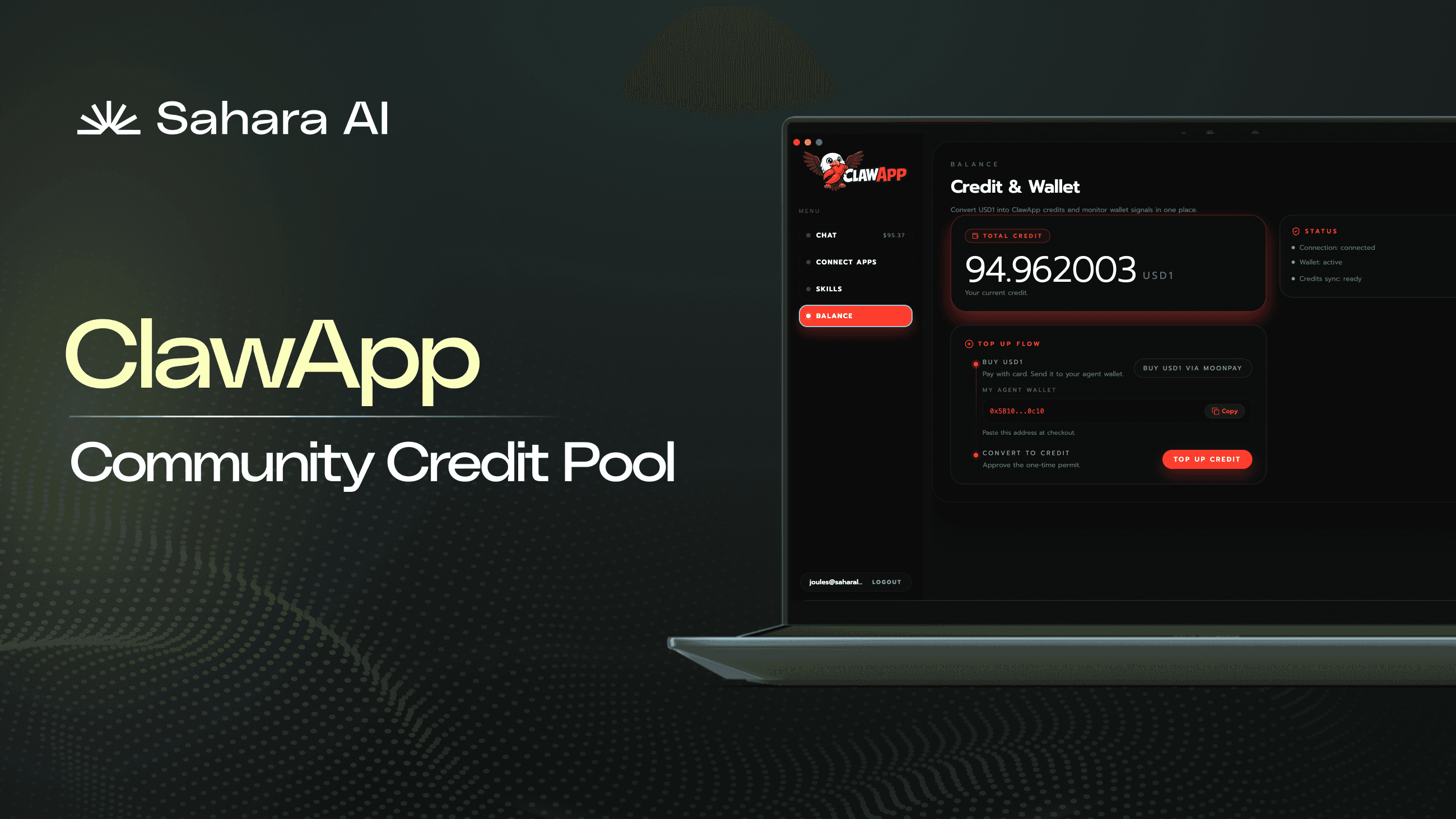

But also, physical AI doesn't mean it doesn't have virtual or software components. We have an upcoming launch for Sahara that everyone should pay attention to, which is around how we can allow people to build agents with a very simple UX/UI while you can also claim ownership of the agents and register the metadata on-chain. That's a hugely important step towards provenance and user-owned AI. If you want a physical AI to be a personal AI for you, it needs a virtual heart that understands who you are. And that's where these agent builders come into play, with the memories and the access to the databases of the personal users. So yeah, stay tuned. We're going to have more to come about that agent builder and registration process.

Joules: Awesome, thank you, Sean. We have a few questions from the audience. One question: With more powerful agents comes more responsibility. What do you think researchers and builders should be careful about when designing AI that's actually interacting with the real world?

Minjoon: Yeah, that's a really great question. It's really about connecting the agents that are just in the virtual world to the physical world. When agents on software have that interface to interact with the physical world, it means we're also more exposed to potential risk. Because it's not just about software, right? They can have robots that can do different harm or different things that we did not intend. So just like with language models, we need to be careful about malfunctioning, but the bar becomes higher.

That's probably the biggest bottleneck of developing these models because we don't want any mistake, especially a mistake that can hurt someone. So that's also why we may see robots first... I mean, there are different opinions: are we going to see robots first at homes or in factories? Of course, factories already have robots, but I'm talking about more humanoid-like robots. And that's a really hard question to answer. But in terms of safety, factories are probably easier because we can have them completely separated from humans so that even if something happens, it doesn't hurt humans. At home, I think we'll probably need much lighter or weaker robots that will not hurt people even if they malfunction. It's kind of similar to a robot vacuum cleaner. It can move around and hit a person, but we're not too concerned about it because the hitting itself isn't really hurting people. I think we need to have robots that, even if they malfunction, don't really hurt anything or create much of a problem. That's probably the first important thing. But once we limit the robots to those restrictions, there will be fewer things we can do with them. So as the robots get safer, I think we'll see more capable robots.

Joules: Another question from the community: What's more important—data, environment, or architecture—when it comes to training agents, especially around physical AI?

Minjoon: I guess the architecture is the model architecture in this case, right? I honestly think that I can't say architecture isn't important in absolute terms. I don't think we would have seen such advancement if we could not have discovered neural networks or the transformer. So architecture is definitely important. But then the question is, do we need a lot of different architectures? And probably the answer is no, because if someone has discovered a really good architecture and if it's open-sourced, then it's very likely that in most cases, we don't have to create new architectures. So that's why the focus goes to data and environments. And as I said, architecture is just more like a container. We need a good container to do things, but that doesn't mean the container itself is capable. It's more that they have a larger, higher upper bound. I really think that data is more important in that sense, once we have a good architecture.

Sean: Yeah, I agree. I think this is like giving the example of thinking about an automobile. Every time a car has an engine upgrade, it's great. The car can run much faster, but you always need gas. And that's basically data. You just have to keep feeding it gas to keep it running. And I think that's true with AI. If you don't give it gas, it's basically useless. It's just parked there, regardless of how good the engine is.

Joules: Yeah, a very valid point. Okay, looking through these questions, what is the most underrated challenge in teaching robots to use both hands? Minjoon.

Minjoon: Underrated challenge. That's a really great question. Because it says "underrated challenge," right? So I think there are some apparent challenges, but if we think about the underrated ones, I think it's really about... how much data we need for these kinds of tasks. That amount of data is much larger than I think many people realize. That's because it's not just about pick-and-place. If we think about pick-and-place, you pick something and then place it somewhere else. We may not need a lot of data to do those kinds of tasks, but humans are actually doing a lot of things with their hands. And the really important thing is that it's not really about the motions. Motions are just the outputs. Just like in language, all the language outputs are just tokens. Each one is one of the, say, 30,000 words that English has. If you think that way, then why is it complicated? It's just that you have to choose the right one among 30,000 tokens, 30,000 words.

What's really important is what kind of intelligence you need to output that sequence of tokens. And that's exactly what's being learned in the model. The same thing is true for all this data. It's not really about the human motions themselves. Of course, they are important, but we're trying to collect input-output pairs so that we can induce the intelligence needed to map the input to the output. And here, outputs are just positions, yes, but the underlying, latent thing that we're learning is intelligence. To learn that, I think we really need a lot of data. And I think that's a bit underrated because people may think that bimanual data is just about, if you have the shape of a cup, how you should approach it. Of course, that's involved, but it's not just about the interaction itself. It's really about the various kinds of reasoning that go into accomplishing the physical task. So I think that's the relatively underrated challenge in the field.

Joules: Yeah, really cool. We are at time. Do you two have any last-minute thoughts? Anything you want to shout out or share before we go?

Sean: Yeah, I can quickly... I want to leave the final line to Minjoon. We have a lot of interesting product launches coming up this month, so stay tuned. The one I just talked about is about building and registering agents on-chain, so you have entirely transparent and auditable ownership down the road. I'm personally very excited about that big step. Minjoon?

Minjoon: Yeah. I think I have probably two last points. One is, as Sean said, the data that everyone produces: you're not tracking it because you're not filming it right now, but everyone produces it every day, doing their daily jobs. Whether you're pouring milk or cooking or doing something with your hands, I think that data will be very valuable. And it's just that the value is not being captured. So I think that's a really important thing in the future. That, of course, requires a lot of layers. It's not just about technology, but it also requires an ecosystem and a community. So I hope that our company will be able to support the technology part, the underlying technology part, and I hope that Sahara can also be the provider for the community part, the ecosystem. And about our news, just like Sean said, our company is preparing the MVP. We're still in stealth mode, so we don't have much revealed yet on our website. But we're trying to launch our product, which is a platform people can use to develop their own robot models themselves without much difficulty, in Q3 this year. So I hope to share that great news soon and hope to stay in touch.

Joules: Excellent. Thank you. Big thanks to Minjoon for coming and to Sean Ren for also helping host today. If our conversation sparked any new ideas, be sure to check out our social channels for the latest updates. Follow Sahara AI, follow Minjoon, and we'll see you next time. Thank you, everybody.