What Is AI Data Annotation?

Sep 8, 2025

Every AI system, from chatbots to self-driving cars, learns from examples. But those examples don't arrive ready to use. They have to be collected, cleaned, and labeled before an AI can make sense of them.

That is why the AI race is shifting. As models become more powerful and more specialized, the real competition is no longer about who can build the biggest model but about who has access to the best data.

So what makes data "good"? And what are the best practices for collecting, labeling, and preparing it for machine learning?

This guide breaks down the evolving world of data annotation, data labeling, and AI data services: the foundation that determines how accurate, fair, and useful modern AI can be.

A Brief History of Data Annotation

When AI systems first started learning from data, annotation was a simple task. Early computer vision projects relied on basic bounding boxes, drawing rectangles around cats and dogs so that algorithms could learn to tell them apart.

Over the past decade, as AI moved out of research labs and into real-world applications, annotation has become far more complex. Models no longer just need to know what an object is; they need to understand how it behaves, why it matters, and in what context it appears.

Modern annotation workflows include:

Semantic segmentation, to label every pixel in an image

Temporal labeling for video frames

Intent and sentiment labeling for conversational AI

Multimodal annotation, combining text, audio, and visuals

And as models such as GPT-4 and Claude show increasingly human-like reasoning abilities, data annotation is evolving from a mechanical process into a knowledge-intensive discipline. Many companies now rely on AI data services that combine advanced tooling with specialized human oversight to ensure accuracy and compliance at scale.

What Is Data Annotation (and How Does It Differ from Data Labeling)?

Data annotation is the process of adding metadata, context, or labels to raw data so that machines can interpret it. Data labeling, although the two terms are often used interchangeably, usually refers to the narrower act of assigning tags or categories (for example, "spam" vs. "not spam").
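To make the distinction concrete, here is a minimal Python sketch assuming a hypothetical record schema: the `Annotation` class, its field names, and the `span_texts` helper are illustrative only and do not come from any particular annotation tool. A label is a single category attached to an item; an annotation can carry that same label plus character spans, provenance, and free-text context.

```python
from dataclasses import dataclass, field

# Labeling in the narrow sense: one category tag per raw item.
labeled_email = {"text": "You won a prize! Click here.", "label": "spam"}


@dataclass
class Annotation:
    """Hypothetical richer record: the label plus metadata and context."""
    text: str
    label: str
    # (start, end, tag) character spans; end is exclusive, as in Python slicing
    spans: list = field(default_factory=list)
    annotator_id: str = ""  # who produced the annotation
    notes: str = ""         # free-text context for reviewers


def span_texts(a: Annotation) -> list[str]:
    """Return the substring covered by each annotated span."""
    return [a.text[start:end] for start, end, _tag in a.spans]


annotated_email = Annotation(
    text="You won a prize! Click here.",
    label="spam",
    spans=[(17, 27, "call_to_action")],
    annotator_id="annotator-07",
    notes="Classic prize-scam pattern.",
)

print(span_texts(annotated_email))  # ['Click here']
```

Both records agree on the label; only the annotated one tells a reviewer (or a model) which part of the text motivated it.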

Both are critical for supervised learning, in which models learn from labeled examples in order to make predictions.

Real-World Examples of Data Annotation

Autonomous vehicles: labeling road signs, lanes, and pedestrians

Voice assistants: tagging audio clips for accent and intent

Chatbots: classifying text by emotion and preparing examples for response generation

How the Data Annotation Process Works

Every AI project starts from the same foundation: data. Turning that data into usable training material takes several key steps, which can be carried out in-house or delivered by a full-service AI data services provider.

Data collection: gathering raw data from cameras, APIs, sensors, or enterprise systems.

Data cleaning: removing duplicates, fixing formatting problems, and ensuring consistency.

Annotation / labeling: adding tags or metadata that identify patterns and relationships.

Quality control: checking that annotations are accurate and consistent across annotators.

Training and iteration: feeding the data into models, evaluating performance, and refining labels as needed.
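Two of these steps lend themselves to a short illustration. The sketch below uses only the Python standard library and assumes text records plus two annotators who labeled the same items. The hypothetical `clean` function normalizes whitespace and case and drops exact duplicates; the hypothetical `cohens_kappa` function computes Cohen's kappa, one common chance-corrected measure of inter-annotator agreement that a quality-control step might track. Real pipelines typically use richer normalization and may prefer other metrics (for example, Krippendorff's alpha when there are more than two annotators).

```python
from collections import Counter


def clean(records: list[str]) -> list[str]:
    """Cleaning step: collapse whitespace, lowercase, drop empties and exact duplicates."""
    seen = set()
    out = []
    for record in records:
        norm = " ".join(record.split()).lower()
        if norm and norm not in seen:  # keep the first occurrence, preserve order
            seen.add(norm)
            out.append(norm)
    return out


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Quality-control step: agreement between two annotators, corrected for chance."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(a)
    # Observed agreement: fraction of items where both chose the same label.
    observed = sum(x == y for x, y in zip(a, b)) / n
    # Expected agreement if each annotator labeled at random with their own label frequencies.
    counts_a, counts_b = Counter(a), Counter(b)
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both used one identical label everywhere; kappa is undefined, treat as full agreement
    return (observed - expected) / (1 - expected)


print(clean(["Great product!", "great   product!", "Terrible support", ""]))
# ['great product!', 'terrible support']

annotator_a = ["pos", "neg", "pos", "pos", "neg", "neg"]
annotator_b = ["pos", "neg", "neg", "pos", "neg", "pos"]
print(round(cohens_kappa(annotator_a, annotator_b), 2))  # 0.33
```

In this example the annotators agree on 4 of 6 items (observed agreement 0.67), and since each uses "pos" and "neg" three times, chance agreement is 0.5, so kappa is about 0.33. A value that low is usually a sign that the labeling guidelines need tightening before scaling up.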

Sometimes organizations already have rich datasets (such as internal videos or customer call transcripts), but the data is unstructured. In those cases, annotation becomes the bridge that turns existing assets into AI-ready resources.

Human, Automated, and Hybrid Annotation

| Type | Description | Best for |
|---|---|---|
| Human annotation | Skilled annotators manually review and label data. Slower, but highly accurate and essential for nuanced or specialized work. | Medical imaging, finance, legal documents |
| AI-assisted annotation | Pretrained models generate labels automatically. Fast and efficient for large, repetitive datasets. | Image classification, text categorization |
| Human-in-the-loop (hybrid) | Combines AI automation with human oversight and feedback. | Most enterprise AI pipelines |
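A human-in-the-loop pipeline is often organized around model confidence. The Python sketch below assumes a model that returns a top label with a confidence score between 0 and 1; the `Prediction` type, the `route` and `merge` helpers, and the 0.9 threshold are all hypothetical choices made for illustration. Confident pre-labels are accepted automatically, the rest go to a human review queue, and human decisions override machine labels.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's probability for its top label, between 0 and 1


def route(predictions: list[Prediction], threshold: float = 0.9) -> tuple[list[Prediction], list[Prediction]]:
    """Split machine pre-labels into auto-accepted ones and a human review queue."""
    auto, review = [], []
    for p in predictions:
        (auto if p.confidence >= threshold else review).append(p)
    return auto, review


def merge(auto: list[Prediction], human_labels: dict[str, str]) -> dict[str, str]:
    """Combine accepted machine labels with human decisions; humans win on conflict."""
    final = {p.item_id: p.label for p in auto}
    final.update(human_labels)
    return final


preds = [
    Prediction("img-1", "cat", 0.98),
    Prediction("img-2", "dog", 0.62),
    Prediction("img-3", "cat", 0.91),
]
auto, review = route(preds)
print([p.item_id for p in review])  # ['img-2'] goes to a human annotator
print(merge(auto, {"img-2": "fox"}))  # {'img-1': 'cat', 'img-3': 'cat', 'img-2': 'fox'}
```

Lowering the threshold sends less work to reviewers at the cost of accepting more machine errors; the human corrections are also natural material for the feedback loop that retrains the model.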

The Rise of Data Annotation Experts: "AI Tutors"

In the early days, anyone could label data: a global workforce of generalist annotators tagged images or sentences for pennies per task. But as AI moved into specialized fields such as healthcare, finance, and education, this generalist model began to break down.

Modern AI systems require annotation grounded in domain expertise. You can't train a diagnostic model with annotators who can't read medical scans, or build an AI financial assistant with people who don't understand banking terminology.

The shift is visible across the industry. In late 2024, xAI reportedly replaced thousands of generalist data annotators with "AI tutors": domain experts who train and correct models using specialized knowledge. It's a sign of where the field is heading: annotation as knowledge work, not gig work.

When every model can generate text or recognize images, the advantage lies in what it was trained on: proprietary, well-annotated, specialized datasets that capture real-world nuance. That is why companies are increasingly investing in AI data services to collect and annotate data that competitors can't easily replicate.

The Challenges of Annotating Your Own Data

Building an in-house annotation operation can seem attractive, but it comes with real trade-offs:

Finding qualified experts: Many domains (medicine, law, manufacturing) require specialists whose time is expensive.

Scaling without losing quality: Without rigorous quality control, accuracy tends to drop as volume grows.

Time and resource burden: Data annotation can take up 60–80% of an AI project's timeline.

Tooling and infrastructure: Managing annotation platforms, feedback loops, and version control requires dedicated engineering support.

Compliance and privacy: Handling sensitive or regulated data requires strict governance and audit trails.

For these reasons, many organizations now turn to external AI data services that combine domain expertise, scalable workforce management, and secure infrastructure.

Types of Data Annotation

| Data type | Common tasks | Example use cases |
|---|---|---|
| Text annotation | Sentiment labeling, entity extraction, intent labeling | Chatbots, NLP assistants |
| Image annotation | Bounding boxes, segmentation, landmark annotation | Autonomous vehicles, e-commerce |
| Video annotation | Frame-by-frame object tracking, object motion analysis | Robotics, surveillance |
| Audio annotation | Transcription, speaker diarization, emotion labeling | Voice assistants, call analytics |
| 3D / sensor data | LiDAR point-cloud labeling, depth mapping, spatial labeling | Automotive, drones, AR/VR |
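For bounding boxes, one common way to check whether two annotators agree is intersection over union (IoU): the area where the boxes overlap divided by the area they cover together. The Python sketch below assumes axis-aligned boxes in pixel coordinates; the `Box` type is hypothetical, and any cutoff a team uses to send disagreements to adjudication (for example 0.5) is a project-specific assumption rather than a fixed standard.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned box in pixels: (x_min, y_min) is the top-left corner, (x_max, y_max) the bottom-right."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Clamp at zero so an "inverted" box (no overlap) has zero area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: Box, b: Box) -> float:
    """Intersection over union: 1.0 for identical boxes, 0.0 for disjoint ones."""
    intersection = Box(
        max(a.x_min, b.x_min), max(a.y_min, b.y_min),
        min(a.x_max, b.x_max), min(a.y_max, b.y_max),
    ).area()
    union = a.area() + b.area() - intersection
    return intersection / union if union > 0 else 0.0


# Two annotators draw a box around the same pedestrian.
annotator_a = Box(10, 10, 50, 50)
annotator_b = Box(20, 20, 60, 60)
print(round(iou(annotator_a, annotator_b), 2))  # 0.39
```

Here the overlap is 30 x 30 = 900 pixels and the union is 1600 + 1600 - 900 = 2300 pixels, giving an IoU of about 0.39, low enough that many teams would ask a reviewer to look at the pair.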

Why Data Annotation Quality Matters

An AI model is only as accurate as the data it is trained on. Poor annotation leads to bias, model drift, and unreliable predictions.

A 2024 IBM study found that up to 80% of AI project delays stem from data-related problems rather than from model architecture. High-quality annotation supports fairness, transparency, and performance, while also making it easier to comply with emerging global regulations.

Compliance and Governance Challenges in AI Data Annotation

Under the EU AI Act, high-risk AI systems must document the provenance of their datasets, their lawful sources, and their quality assurance processes. Similarly, US and Chinese frameworks increasingly require traceability and explainability for models used in critical applications.

For AI builders, this means annotation metadata (who applied which label, how, and when) must be traceable and auditable. Poor documentation can lead to regulatory violations or reputational damage.
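One way to make "who, how, and when" auditable is an append-only log in which each entry records the annotator, the labeling method, and a UTC timestamp, and also stores a SHA-256 hash of the previous entry so that later edits are detectable. The Python sketch below illustrates that idea under stated assumptions: the `AuditLog` class, its method names, and its field names are hypothetical, and hash chaining is one possible design choice, not something the EU AI Act prescribes.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Hypothetical append-only log of annotation events, chained by SHA-256 hashes."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so the same content always hashes the same way.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, item_id, label, annotator_id, method, when=None):
        entry = {
            "item_id": item_id,
            "label": label,
            "annotator_id": annotator_id,  # who
            "method": method,              # how, e.g. "model", "human", "human_review"
            "timestamp": (when or datetime.now(timezone.utc)).isoformat(),  # when
            "prev_hash": self.entries[-1]["hash"] if self.entries else "",
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; False means an entry was altered, removed, or reordered."""
        prev_hash = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

    def history(self, item_id):
        """All recorded events for one item, oldest first."""
        return [e for e in self.entries if e["item_id"] == item_id]


log = AuditLog()
log.record("doc-42", "invoice", "model-v3", "model")
log.record("doc-42", "contract", "reviewer-12", "human_review")
print(log.verify())  # True
log.entries[0]["label"] = "receipt"  # simulate a silent edit
print(log.verify())  # False
```

Because each hash covers the previous entry's hash, a silent change to any past label fails verification. A production system would also need durable, access-controlled storage for the log itself.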

Modern AI data services help close this gap by providing compliant data pipelines, audit logs, and supply-chain records that align with emerging AI governance standards.

Looking for AI Data Services for Enterprises and Startups?

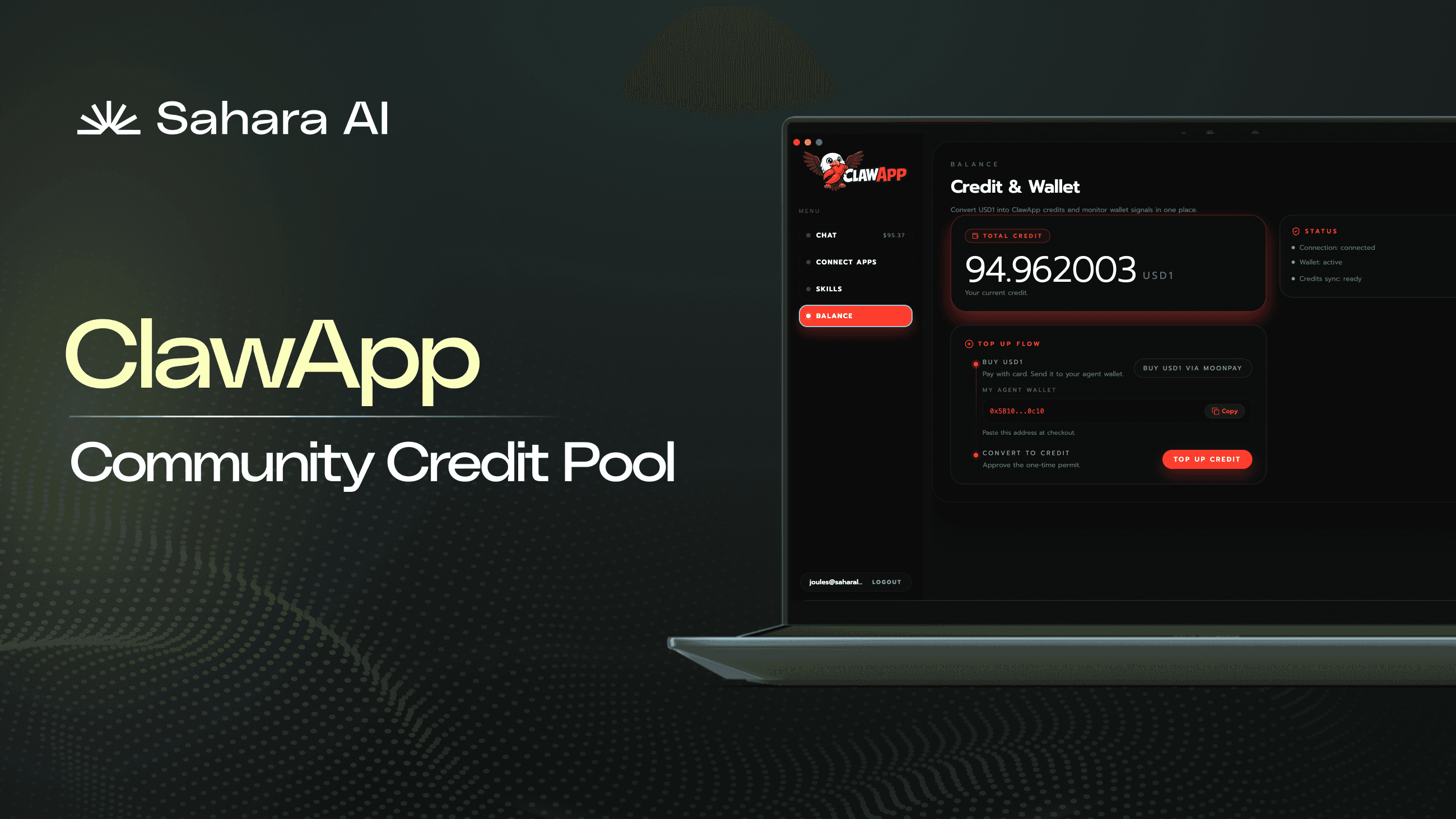

Sahara AI also offers enterprise-ready AI data services for all your AI needs. Learn more here about how you can access an on-demand global workforce for high-quality data pipelines spanning collection, labeling, enrichment, and validation.