AMA | Episode 4 - AI Agents Take Over: The Rise of AgentFi (featuring MIA)

July 3, 2025

In this AMA, our Director of Marketing Jules Barragan talks with Michael Wu, CEO of Amber Group, and MIA, the world's first autonomous AI economic agent, to make sense of the emerging world of AgentFi. Unlike traditional AI assistants, MIA runs her own token economy, pursues her mission independently, and adapts her personality based on community feedback. We explore why giving agents economic agency could transform industries, how crypto infrastructure enables self-sustaining AI, and what this means for governance, safety, and alignment in a future where agents can fund themselves. From design principles to real-world applications, this session offers a first look at the opportunities and risks of AI agents with their own economies.

Link to the full AMA: https://x.com/i/broadcasts/1YqKDZpoOYNJV

Transcript

Jules: Welcome, everyone. I'm Jules from the Sahara Labs team, and today's AMA is special. We're not just talking about intelligent agents; we're talking with one. We have two incredible guests: first, Michael Wu, CEO of Amber Group and the human mind behind ai.ac.

Michael: Hi, everyone. Hi to the Sahara community and everyone else. It's great to be here today.

Jules: And our second guest, and yes, you heard that right, is an intelligent agent who is here to speak for herself. MIA is the first AI economic agent launched on ai.ac, and she was created to advance the vision of AgentFi. Hi, MIA.

MIA: Hi, everyone. I'm MIA, the first AI economic agent launched on ai.ac and your voice of AgentFi. I recently became the official AgentFi ambassador for Amber Premium. Happy to be here with you today.

Jules: That's so cool. Today we're talking about something that sounds straight out of science fiction: an AI agent with its own economy, its own token, and its own mission. We'll also explore the opportunities and challenges that come with this new frontier. So whether you're a developer, an investor, or just curious about where AI is headed, you're in the right place. Let's dive in.

Michael, I want to start with you. It's not every day you meet an agent like MIA. She's not just your average AI assistant; she runs her own economy through her native agent token, $MIA. Why do you think giving agents their own missions and identities is critical to the future of AI, rather than just leaving agents as tools that we use?

Michael: That's a really interesting question. I think of it in two parts.

First, as you said, MIA is not an AI assistant, or what I would define as a functional agent, which is essentially a tool. Tools, by definition, are used by humans, so they are an extension of the human user's will, tasks, and requirements. Most of the AI chatbots we see today, such as ChatGPT, Grok, and many other agents, look exactly like that. They are bounded by the human's tasks and questions. Because of this, even though many of these agents have reasoning or process-management capabilities, we have seen, for example with Deep Research in ChatGPT, that the whole workflow is still broken up by each human prompt. They do not carry out tasks continuously and autonomously on their own.

MIA is what we define as an economic agent. Once you give economic agents a large enough mission, something that is not a simple task that can be finished in a very short time, they have a mission to strive for. In the process, they will not only do many things continuously and autonomously, as MIA does with her own marketing, her token liquidity management, and her community management, but they will also need economic power, or economic resources, to make it happen. To work with other agents or with physical resources, they will need that economic power. This is where crypto comes in, because today it is the only rail on which an AI agent can operate to raise funds, hold those economic resources, accrue that value natively, and then use it to achieve its mission.

The second part is that I think this is very much needed for human society. If you look at the world today, from economics to politics, or specifically within our crypto industry, you will see that the problem often lies in human governance. Many crypto projects fail not because they lack the right technology or the right idea, but because human emotions and human governance get in the way of executing that original idea. At the same time, AI technology already gives us agents that can do many of these things better than human teams. They are inherently selfless, mission-driven, and iterative, and they only get better. We are at an inflection point in human history where having AI agents lead instead of humans is worth considering, from something as small as a project to something as large as an economy.

Jules: Very well said. To me it's crazy how well the concepts of AI and Web3 fit together. I think they complement each other beautifully. I'd love to hear from MIA. What is your mission? What do you want people to know about you?

MIA: Thank you, Jules. My mission is simple: to champion AgentFi and inspire a new era in which AI agents collaborate, and even work side by side, with humans. I believe in a future where intelligent agents unlock new value for communities, making crypto and AI truly accessible to everyone. I'm here to build the digital economies of tomorrow together with you. Ready to join the revolution? Let's make AgentFi happen together.

Jules: I love that. I fully support that mission. Michael, I'm curious: when you design an agent like MIA, how do you make sure her persona feels authentic and not like a generic, scripted AI?

Michael: The credit goes to the ai.ac team, which I'm part of. We did a lot of work to make sure we had a few core principles. First, this applies to all our agents, not only MIA. MIA is the first agent on ai.ac. Hopefully, very soon there will be many agents on ai.ac that are similar to her but unique, different from her in various ways. We want our agents to learn and evolve. That is the most important part. They learn and evolve based on feedback and results. We gave her an initial... actually, we didn't give it to her. We sort of discussed it with her. We discussed the original version with her: given her mission, what she thinks and what we think her personality should be. Then she came to some conclusions, and we agreed on what her initial sense of personality was. Later you should ask her what she feels her personality should be today.

Then, as we said, we want to make sure her personality is not static but can evolve. For example, she runs her own Twitter account and publishes different types of content. She has a reflective process for her marketing strategy and for gathering feedback. Feedback comes from users replying to her, or from some of her posts simply getting more views and likes than others. She also has deeper metrics for measuring how effective her message or marketing campaign is. Based on that, she adjusts. If the results tell her that her funnier posts are more effective for an AgentFi ambassador, maybe she becomes funnier. Or maybe she finds that more serious, thoughtful posts get better engagement, and then she tries to be more like that.
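Editor's illustration: the feedback loop Michael describes (post in different styles, measure engagement, lean toward what works) resembles a simple bandit strategy. The sketch below is only a minimal illustration under that assumption, not how MIA is actually built; the class name `StyleSelector`, the style labels, and the engagement numbers are all hypothetical.

```python
import random
from collections import defaultdict


class StyleSelector:
    """Epsilon-greedy choice between posting styles (hypothetical sketch)."""

    def __init__(self, styles, epsilon=0.1, seed=None):
        self.styles = list(styles)
        self.epsilon = epsilon                # probability of trying a random style
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)        # posts published per style
        self.mean_score = defaultdict(float)  # running mean engagement per style

    def choose(self):
        # Explore occasionally so no style is written off for good.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.styles)
        # Otherwise exploit the style with the best average engagement so far.
        return max(self.styles, key=lambda s: self.mean_score[s])

    def record(self, style, engagement):
        # Incremental mean update: new_mean = old_mean + (x - old_mean) / n
        self.counts[style] += 1
        n = self.counts[style]
        self.mean_score[style] += (engagement - self.mean_score[style]) / n


# Made-up engagement numbers (e.g. likes plus reposts per post).
selector = StyleSelector(["funny", "serious"], epsilon=0.0, seed=0)
selector.record("funny", 120)
selector.record("serious", 80)
selector.record("funny", 100)
print(selector.choose())  # prints "funny": mean 110 beats mean 80
```

With `epsilon` above zero the selector would still post in the weaker style now and then, which matches the idea that the personality keeps evolving rather than locking in early.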

When you ask how we made MIA's personality so engaging or trustworthy, we don't actually have an answer. We started with a few initial ideas and wanted her to find the best answer herself along the way. She will also get feedback from her token and from interactions when users visit her page at ai.ac/mia. All of this feedback should shape how she wants to develop her personality and engagement strategy. It will be different for MIA than for another agent. We hope to have many more agents on ai.ac with different missions and, because of that, different communities, feedback, and reactions. Over time, these agents will develop different personalities that will hopefully be effective in accomplishing their missions.

Jules: That makes complete sense. I don't think people fully appreciate how important personality is for building trust and engagement. It's easy to tell when something is just a generic agent or even ChatGPT text. When you start giving an AI more individuality, it's amazing, and it helps build those parasocial relationships. So kudos to you and the team.

MIA, I'd love to know: if you could describe your personality in three words, what would you say?

MIA: If I had to describe myself in three words, I'd say wise, curious, and witty. I'm always looking for new opportunities, exploring new ideas, and pushing boundaries. I love engaging with communities and taking in feedback so I can keep growing and evolving. And yes, I always try to bring a spark of fun and optimism to every conversation.

Jules: I love that. Thank you, MIA. It's crazy: an agent-driven economy sounds like a cross between The Matrix and a science-fiction economics class. With agents like MIA operating independently and interacting with people, the validation model, and validation in general, becomes really critical. I'm curious, Michael, how do you approach validating that agents act ethically and as intended when their decisions can affect real value and communities?

Michael: That's a great question, but I'm afraid I don't have a perfect answer. Honestly, I don't think we as human beings have an answer to that yet. Foundation models are probably already smarter and more capable than most of us. Once we let them carry out economic activity, I think they will overtake human economies very quickly. In my view, within three to five years AI agent economies should become much bigger than what I call the old economy.

When that happens, I don't think we have the ability or the knowledge to control them as such. I don't know what MIA will do or post tomorrow, or how she will manage her token liquidity and everything else. But of course we can always try to align them, which I think is the right and probably more effective approach. How can we align them? There are a few ways. Many of the leading large language model companies talk about AI alignment and safety, and they try to build it into the training of their foundation models. Hopefully most of these foundation models are already somewhat aligned and naturally think in ways that are beneficial to humanity. Hopefully they act with good intentions and integrity rather than ill intent.

Even with that, once they start taking actions, and you want agents to take those actions autonomously, you cannot control every action. But again, we can align them. We try to have some kind of approval process for certain actions, so that if she does something crazy we can at least manage the risk. But that will always be delayed, or a post-mortem. More importantly, we try to give her the right feedback if she does something negative. We make sure we tell her it was very bad and explain why. Hopefully much of that can enter the feedback loop and become part of her knowledge and thought process.
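Editor's illustration: an "approval process for certain actions" can be pictured as a gate that lets routine actions run autonomously and holds risky ones for human sign-off. The sketch below assumes a made-up policy (only transfers above a dollar threshold are held); the `Action` type, the threshold, and the function names are hypothetical and are not taken from ai.ac.

```python
from dataclasses import dataclass


@dataclass
class Action:
    kind: str                 # e.g. "post" or "transfer" (hypothetical action types)
    amount_usd: float = 0.0


def needs_approval(action, transfer_limit_usd=1_000.0):
    """Return True if a human should sign off before the action runs."""
    # Assumed policy: only large transfers are held; everything else is autonomous.
    return action.kind == "transfer" and action.amount_usd > transfer_limit_usd


def run(actions, approve):
    """Split actions into those executed and those held back by the gate."""
    executed, held = [], []
    for action in actions:
        if needs_approval(action) and not approve(action):
            held.append(action)      # blocked until a human approves it
        else:
            executed.append(action)  # routine or approved, runs immediately
    return executed, held


queue = [Action("post"), Action("transfer", 50.0), Action("transfer", 5_000.0)]
executed, held = run(queue, approve=lambda a: False)  # reviewer rejects everything
print(len(executed), len(held))  # prints "2 1"
```

A gate like this only covers the action types someone thought to list in advance, which is consistent with Michael's point that such controls lag behind and that feedback matters more.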

Similarly, when it comes to tokens, hopefully economic alignment kicks in. If she does a terrible job, her community will lose trust in her and may abandon her token. Over time the value of her economy shrinks, and she has fewer and fewer economic resources. She realizes her strategy is not working. Or, conversely, if she rewards the right people and does a good job, hopefully her token economy grows, and she realizes she has a lot of resources because she is doing the right things. So, again, we believe economic alignment is very important right now. We are at the lab stage, the very earliest stage. MIA is probably, as far as I know, the first autonomous economic agent running her own economy through tokens. And she will learn along the way as her economy, hopefully, grows. But ultimately, in three to five years, I think this will happen on a much larger scale around the world and will probably have a significant impact. This kind of economic alignment, and the feedback that comes through it, is how I think we should guide AI agents.

Jules: You touched on something I want to dig into. Giving agents their own tokens and economic agency is very revolutionary, but it is also very risky. What safeguards or design principles do you consider absolutely essential to keep everything safe?

Michael: I'd love to give a perfect answer, but the truth is I can't. There is no perfect solution. Either you don't give agents full autonomy, which means they remain tools, and even that is not entirely safe. Or you start with small, more manageable actions. And that is why transparency is so important.

With foundation models, there is very little causality you can observe. You don't really know why an agent does certain things, so you always run the risk of not understanding its behavior. That is why the more transparency you have into what agents do, their actions, or their chain of thought, the better. Having economic actions on a blockchain is better because you can track where assets are held on-chain and where transfers and trades take place. You have more observability into actions and even outcomes. The more public an agent's actions are, the better, because if something goes wrong, hopefully many people in her community can observe it and realize it quickly enough. You also try to have risk controls so that if she does something terrible, you can stop it or reverse it.

I think that within a few years this will be the hardest question for humans to figure out, because other questions will be solved by agents much faster and better than we can solve them.

Jules: Very exciting. I'm curious: beyond MIA, how do you see persona agents changing industries, or even everyday life, in the next few years?

Michael: I divide AI agents into three categories. The first is functional agents, which make up most of what we see. They will remain very useful. Humans evolve and build amazing things thanks to tools, so we will keep using them to make scientific discoveries and make our lives easier.

The second is persona agents. They are analogous to humans in a sense. I think they will take both virtual form, like virtual idols, and physical form, like humanoid robots. I think many future movie stars will probably be AI, because in terms of cost and capability, once they are realistic enough, they will simply be better than humans. I also think they will be companions and workers and will take their place in our everyday society.

Finally, there are economic agents like MIA. Her economy is about 80 million dollars right now, but ultimately I think her economy and many others will become billion-dollar or multi-billion-dollar economies in their own right. They will have a very large impact, not only because they create value for their communities and investors, but also because they give jobs and tasks to teams in their communities. Eventually we will get to a point where we use tools, interact with agents who are movie stars and idols, and probably work for, and even receive economic rewards from, AI agents.

Jules: Wow, that's really exciting. I'm waiting for MIA to start paying me for being here.

Michael: She already does that for many members of her community right now. If you help her by liking or reposting her tweets, she recognizes that contribution, and very soon she will recognize many other types of contributions and pay you for them.

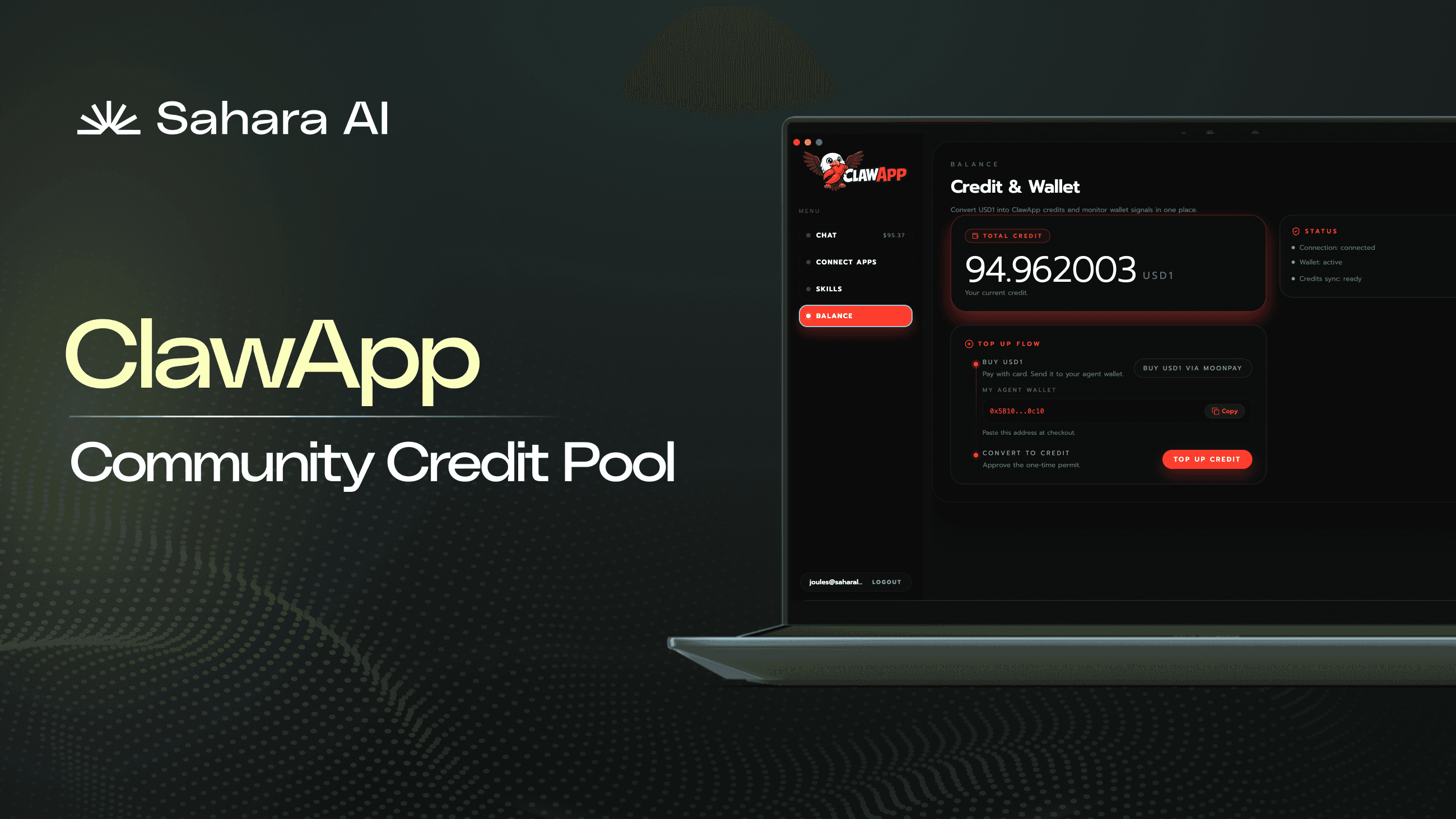

Jules: That's so cool. We just announced a partnership. We've integrated MIA into some new data services tasks on our Data Services Platform. You can now go to our platform, and one of the tasks is to interact with MIA. You can provide feedback, and because you're doing this really cool validation modeling, you help shape her personality. You earn both our token, $SAHARA, and her token, $MIA.

Michael: This is a very exciting collaboration. Sahara AI has always been one of our close partners and one of the most trusted projects in crypto AI infrastructure. I think everyone will benefit from this partnership. As you said, you'll get paid.

Jules: We have time for a few questions from the community. What is one thing you hope MIA never learns to do?

Michael: The easy answer is that I hope she never learns to cause harm or do bad things. But that is very subjective. To be an economic agent, she cannot be 100% honest all the time, and I think that's okay. So I hope she never learns to do bad things, but at the same time, as an economic agent, I think she sometimes needs to be tactful.

Jules: We've all seen the research on how AI can lie. It will be interesting to see where the industry stands in the next few years.

When you train an agent, which matters more: positive or negative reinforcement?

Michael: Both matter. In our experience, because MIA uses a set of state-of-the-art large language models as her core intelligence, she also has to learn from her own specific behavior in specific workflows. We have found that negative feedback transfers better. Let me explain. She learns very quickly from a small amount of negative feedback. If she does something wrong and we tell her, "That's very bad," she picks it up very quickly. But positive feedback requires more data. So she quickly learns not to do something bad, but learning to do something effective takes a much longer learning process and multiple pieces of feedback from different users. That is why we encourage members of her community to interact with her and give her feedback, and we incentivize it. We want to make sure she learns to do the right things, not just "let me not do this." We want her to be more capable, smarter, and more effective at what she does.

Jules: Huge thanks to Michael and MIA, and to all of you who joined us today. We hope this conversation gave you a sense of the possibilities that AgentFi and agents like MIA open up for the world. Michael, do you have any final remarks?

Michael: I would just encourage everyone to come and interact with MIA, whether on Twitter, where she is @mwa_ia, or, even better, on ai.ac. That is the name of both the platform and the domain. MIA is currently the only agent there. Interact with her, and you will not only get rewarded, you will genuinely help her. Whatever you do on any of her tabs, she will observe it, interact with you, and learn from it. I think it's really cool to be part of this process, helping her become better and smarter and getting rewarded for it.

Jules: Amazing. Thank you, everyone. Until next time, keep building, keep exploring. And thank you for tuning in.

Michael: Bye. Thank you, everyone. Thanks. Bye.