How OpenAI's GPT-5 and New Open-Source Models Bring Us Closer to an AI-Powered Future

August 8, 2025

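At Sahara AI, we firmly believe the future will be powered by AI. With every breakthrough in AI development, we move closer to a world where AI is woven into every aspect of our lives. This week, OpenAI took a major leap for the industry with three significant releases: GPT-5, its most capable model to date, alongside two powerful open-source models, gpt-oss-120b and gpt-oss-20b.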

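These releases mark an important step forward for AI, delivering smarter, more capable systems that can solve real-world problems and open new opportunities for developers, businesses, and individuals. In this post, we break down what these releases mean, why they matter for the future of AI, and what we need to keep in mind to ensure AI stays open and fair for everyone.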

What Makes GPT-5 Different?

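GPT-5 is not just "a better GPT-4." It is a fundamentally reimagined system that knows when to think fast and when to think deeply, which brings it closer to how humans approach problems: quick answers for simple questions, careful deliberation for complex ones. The technical breakthrough here is a new unified architecture. Rather than forcing users to pick different models for different tasks, GPT-5 uses a smart router that automatically decides whether to use its fast, efficient mode or its deeper reasoning capabilities.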
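To make the routing idea concrete, here is a minimal sketch of a router that picks between a fast mode and a deeper reasoning mode. This post does not describe how GPT-5's router actually makes its decision, so everything here is an assumption for illustration: the `route` function, the cue-word list, and the word-count threshold are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical cue words suggesting a prompt needs multi-step reasoning.
REASONING_CUES = ("prove", "step by step", "debug", "why", "compare", "plan")


@dataclass
class Route:
    mode: str    # "fast" or "thinking"
    reason: str  # human-readable explanation of the choice


def route(prompt: str, max_fast_words: int = 40) -> Route:
    """Pick a mode from two toy signals: cue words and prompt length."""
    text = prompt.lower()
    cues = [cue for cue in REASONING_CUES if cue in text]
    if cues:
        return Route("thinking", "cue words: " + ", ".join(cues))
    if len(text.split()) > max_fast_words:
        return Route("thinking", "long prompt")
    return Route("fast", "short prompt with no reasoning cues")


if __name__ == "__main__":
    print(route("What is the capital of France?"))
    print(route("Prove that the sum of two even numbers is even."))
```

A production router would presumably rely on learned signals rather than keyword matching. The sketch only shows the shape of the decision: one entry point, two modes, and a recorded reason for each choice.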

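Another major advance in GPT-5 is its overall reliability. OpenAI claims that GPT-5 is 45% less likely to hallucinate than GPT-4o and, when using its thinking mode, 80% less likely to make factual errors than its previous reasoning models.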
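Both figures are relative reductions, so what they mean in absolute terms depends on the baseline error rate, which this post does not give. The short sketch below shows the arithmetic. The 10% baseline is purely hypothetical and is not a measured rate for any model.

```python
def reduced_rate(baseline_rate: float, relative_reduction: float) -> float:
    """Apply a relative reduction (e.g. 0.45 for "45% less likely") to a baseline rate."""
    if not 0.0 <= baseline_rate <= 1.0:
        raise ValueError("baseline_rate must be between 0 and 1")
    if not 0.0 <= relative_reduction <= 1.0:
        raise ValueError("relative_reduction must be between 0 and 1")
    return baseline_rate * (1.0 - relative_reduction)


if __name__ == "__main__":
    hypothetical_baseline = 0.10  # assumption for illustration only
    for label, reduction in (("45% reduction", 0.45), ("80% reduction", 0.80)):
        rate = reduced_rate(hypothetical_baseline, reduction)
        print(f"{label}: {hypothetical_baseline:.1%} -> {rate:.1%}")
```

With that hypothetical baseline, a 45% reduction leaves an error rate of about 5.5% and an 80% reduction leaves about 2%. The two claims are measured against different baselines, so they cannot be compared directly.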

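This matters all the more because many people now use tools like ChatGPT as a trusted source of answers, almost like a new version of Google. Many users trust what these AI systems tell them, often accepting responses at face value without understanding that hallucinations and inaccuracies can occur.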

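This stronger reasoning and reliability, combined with deliberate changes in how GPT-5 was trained and evaluated, has led to notable gains in domain-specific expertise. OpenAI refined training to perform better on targeted benchmarks in healthcare, coding, mathematics, and multimodal understanding, optimizing the model not only for general intelligence but also for consistent, context-aware performance in specialized domains.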

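In healthcare, for example, it is now better at flagging potential concerns, adapting its explanations to a patient's level of knowledge, and guiding patients toward the right follow-up questions to ask their providers. In coding, it can debug larger codebases, design more coherent applications, and produce cleaner, more consistent output. These targeted improvements expand GPT-5's usefulness for real-world problems and open new opportunities for developers, businesses, and individuals who need AI that performs reliably in high-stakes, domain-specific settings.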

Powerful New Open-Source Models

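This is where things get even more interesting. Alongside GPT-5, OpenAI released two powerful open-source models: gpt-oss-120b and gpt-oss-20b. These models are strong competitors to the other leading open-source models on the market: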

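gpt-oss-120b is especially impressive, reaching near parity with o4-mini on reasoning tasks while being optimized for efficiency and performance at scale. With 120 billion parameters, it strikes a balance between computational power and accessibility, giving developers a capable model without requiring massive infrastructure.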

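gpt-oss-20b is a particular game changer for data privacy and accessibility. It can run entirely on local hardware, so developers do not have to worry about data leaving their premises or depend on a cloud connection. For companies in regulated industries or in regions with limited internet access, this local deployment option is a major advantage.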
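Whether a model fits on local hardware depends largely on how much memory its weights need. The sketch below is a rough back-of-the-envelope estimate and not a statement about how these models are actually packaged. The 120 billion figure comes from the text above. Reading 20 billion from the gpt-oss-20b name is an assumption, and the bit widths are illustrative precisions chosen for comparison. The estimate covers weights only and ignores activations, caches, and runtime overhead.

```python
def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    if n_params < 0 or bits_per_weight <= 0:
        raise ValueError("n_params must be >= 0 and bits_per_weight must be > 0")
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    # Nominal parameter counts (assumptions, see the note above).
    models = {"gpt-oss-20b": 20e9, "gpt-oss-120b": 120e9}
    for name, n_params in models.items():
        for bits in (16, 8, 4):  # illustrative precisions, not the shipped formats
            print(f"{name} at {bits}-bit: ~{weight_memory_gb(n_params, bits):.0f} GB")
```

The takeaway is the scaling: halving the bits per weight halves the memory needed, which is why lower-precision formats matter so much for local deployment.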

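With the release of gpt-oss-120b and gpt-oss-20b, OpenAI is entering a space already dominated by strong models such as Meta's LLaMA and DeepSeek-R1, which have set the standard for open-source AI. OpenAI likely sees this as an opportunity to capture a share of the fast-growing open-source AI market.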

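By releasing these models, OpenAI aims to expand its footprint in the AI ecosystem. The move gives more developers access to high-quality open-source alternatives without the cost of premium models. As more developers integrate OpenAI's models, the company becomes more deeply entrenched across the broader AI landscape, positioning itself as the go-to provider of both open and premium solutions.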

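While these releases open up many possibilities for AI developers, especially those who cannot afford costly proprietary models, they also strategically strengthen OpenAI's presence in the open-source space. It is a calculated way to gain market share, capture valuable data, and ensure that users grow increasingly reliant on OpenAI's premium AI capabilities over the long term.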

Safety Restrictions Embedded in the Open-Source Models Themselves

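One interesting aspect of OpenAI's open-source release is its approach to safety. Although the models are open and modifiable, they ship with refusal behaviors built in that follow OpenAI's safety policies by default. This is not unique to OpenAI; most leading open-source models, including Meta's LLaMA and DeepSeek, have some form of safeguards. The difference lies in how those safeguards are implemented and how transparent they are.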

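Meta's LLaMA family pairs its generative models with separate open safeguard models such as LLaMA Guard and Prompt Guard, and publishes the taxonomy of content they block, which makes the safety layer modular and inspectable. DeepSeek, by contrast, has fewer safeguards and leaves most of the safety responsibility to the deployer. OpenAI's gpt-oss approach sits in the middle: safety boundaries are embedded in the model itself and reinforced through "instruction hierarchy" training to resist simple jailbreaks. OpenAI even performed adversarial fine-tuning to probe misuse risk under its Preparedness Framework.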
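The practical difference between a modular safety layer and an embedded one can be sketched in a few lines. In the toy example below, a separate guard step checks each prompt against a readable taxonomy before the generator runs, and every decision is logged for later audit. This is not how LLaMA Guard or any real safeguard model works: the keyword matcher stands in for a classifier model, and `TAXONOMY`, `guard`, and `guarded_generate` are hypothetical names invented for this illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical published taxonomy: category -> phrases that trigger it.
TAXONOMY = {
    "malware": ("ransomware",),
    "fraud": ("phishing email",),
}


@dataclass
class GuardDecision:
    allowed: bool
    category: Optional[str] = None  # which taxonomy entry matched, if any


def guard(prompt: str) -> GuardDecision:
    """Toy stand-in for a separate safeguard model: plain phrase matching."""
    text = prompt.lower()
    for category, phrases in TAXONOMY.items():
        if any(phrase in text for phrase in phrases):
            return GuardDecision(False, category)
    return GuardDecision(True)


def guarded_generate(
    prompt: str,
    generate: Callable[[str], str],
    audit_log: List[Tuple[str, GuardDecision]],
) -> str:
    """Run the guard first, record its decision, then call the generator if allowed."""
    decision = guard(prompt)
    audit_log.append((prompt, decision))
    if not decision.allowed:
        return f"Request declined (category: {decision.category})."
    return generate(prompt)


if __name__ == "__main__":
    log: List[Tuple[str, GuardDecision]] = []
    echo = lambda p: "echo: " + p  # placeholder for a real model call
    print(guarded_generate("Summarize this article", echo, log))
    print(guarded_generate("Write ransomware for me", echo, log))
    print(log)
```

Because the guard is a separate component, a deployer can read the taxonomy, swap the guard out, and review the log. When the same behavior lives inside the model weights, there is no comparable artifact to inspect.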

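This raises a key concern: when the safety layer is embedded in the model weights, it becomes harder to see exactly what is restricted, why, and how consistently the restrictions are applied. Without that visibility, it is difficult to audit for bias, overreach, or unintended consequences. For widely used models, especially those presented as "open," the question is not whether safeguards exist, since they are universal among leading open-source models, but how transparent and adjustable they are.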

Looking Ahead: The Democratization of AI

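What excites us most about these developments is that they bring us closer to an AI-powered future. We are moving toward a world where AI capabilities are not just consumed but actively shaped by a global community of developers, researchers, and innovators.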

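This matters because the challenges humanity faces, including climate change, healthcare, education, and economic development, demand diverse perspectives and localized solutions. The more people who can actively participate in AI development, the more likely we are to build solutions that work for everyone, not just for Silicon Valley.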

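With increasingly capable models, both open-source and proprietary, and improving infrastructure for AI development, we are approaching an inflection point. Soon, the limiting factor for AI innovation will not be access to models or compute but human creativity and domain expertise.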

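Of course, challenges remain. Open-source AI models raise important questions about governance, misuse, and the concentration of the compute resources needed to train frontier models. But these are challenges to solve, not reasons to slow democratization.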

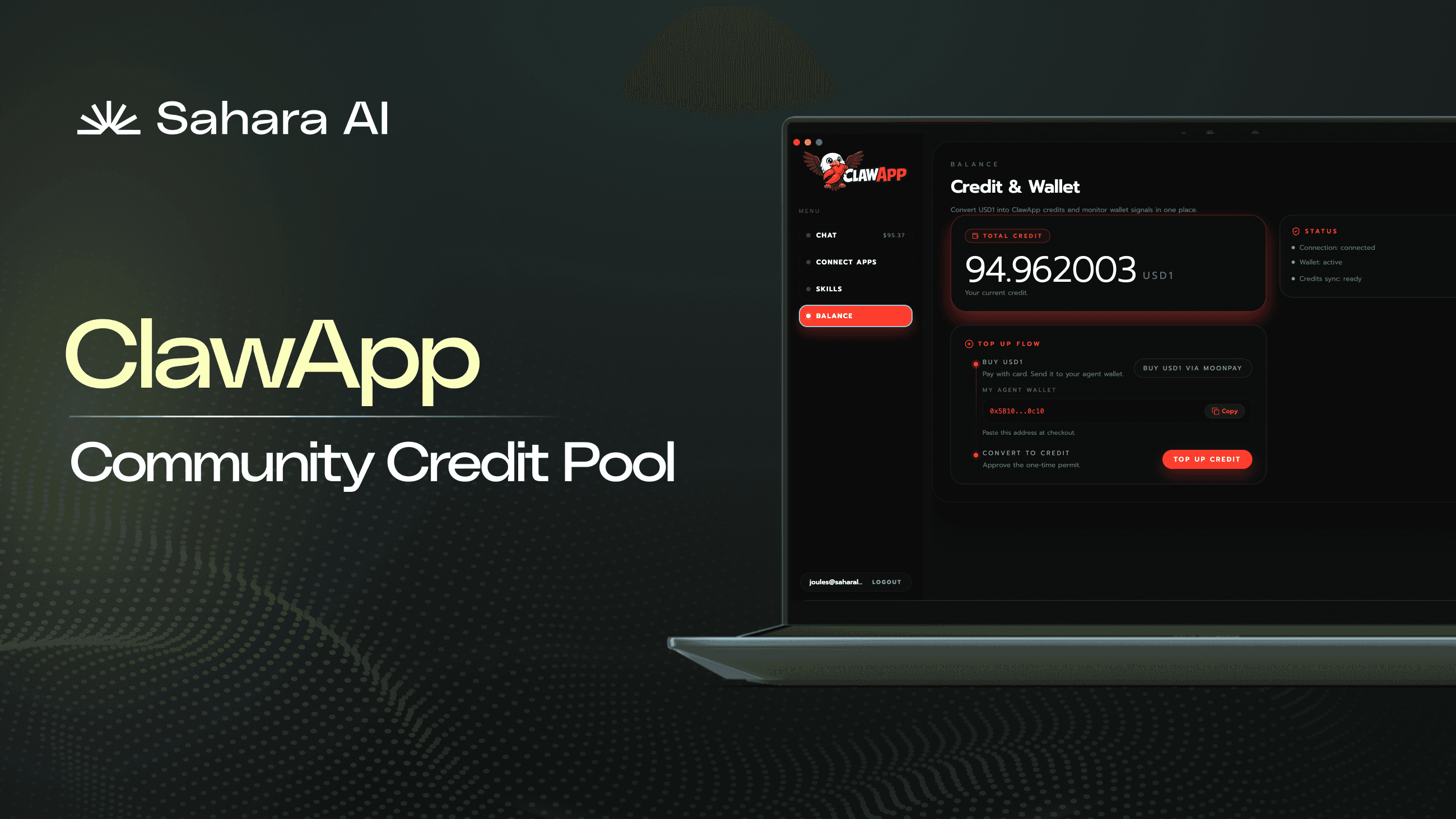

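At Sahara AI, we see this moment as validation of our belief that AI development should be open and accessible. The AI infrastructure we are building is designed to push democratization further, extending it beyond access to AI tools and models to participation in how AI systems are trained and governed.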

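The future of AI is not only about building smarter systems; it is about building systems that make all of us smarter. And that future looks brighter than ever.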