ClawGuard: Verifiable Guardrails for OpenClaw Agents

2026. 2. 2.

By Xisen Jin, Research Scientist at Sahara AI

As OpenClaw adoption accelerates, risk is scaling faster than trust.

That’s why our team is excited to finally release ClawGuard, an open-source prototype that lets your OpenClaw agents operate with enforceable, verifiable guardrails when interacting with users, services, and real-world systems.

Today’s OpenClaw users and the service providers that engage with their agents are increasingly exposed to risk, often without realizing it.

On the user side, OpenClaw agents are frequently granted broad permissions that most people don’t fully understand. We’re already seeing cases where locally run agent setups take actions far beyond what users intended or realized they had approved, leading to exposed private data, unintended system access, and financial harm.

On the service side, providers are seeing a growing volume of agent-driven API calls, tool usage, and automated interactions—often without meaningful guardrails in place. When something goes wrong, users don’t blame the agent’s configuration or prompt. They blame the service. That pulls providers into abuse reports, fraud investigations, account recovery, and reputational damage, even though the unsafe behavior originated entirely outside their systems.

ClawGuard enables OpenClaw agents to cryptographically prove that they’re operating behind a specific guardrail, enforced at runtime.

To get started, check out our GitHub repository or view our full demo.

Introducing ClawGuard

While working on Sahara AI’s agentic protocols and earlier x402 extensions, we kept returning to a simple question:

What if an agent could cryptographically prove that it is running behind a specific guardrail?

Every AI agent operates under constraints—limits on what it can say, which tools it can invoke, and which actions it can take on a user’s behalf. Those constraints are what prevent an agent from leaking private data, taking unsafe actions, or responding in ways that could cause real harm.

Today, those boundaries are typically assumed rather than verified. An agent may be configured with policies and safeguards, but to an outside party, there’s no reliable way to confirm that those protections are actually in effect when a response is generated.

Exploring that gap led us to build a small research prototype. That prototype became ClawGuard.

ClawGuard is an open-source prototype that enables OpenClaw agents to produce cryptographic proof that:

A known guardrail is actively enforcing policy

The agent is running inside a Trusted Execution Environment (TEE)

The response was generated under those constraints, not merely claimed after the fact

Instead of trusting declarations, verifiers can check evidence directly.

This matters for human-centric, high-stakes interactions. Users today often turn to AI for things that actually matter:

High-stakes advice

Sensitive personal questions

Emotional support

Risk-laden decisions

In these moments, users don’t just want a good answer. They want to know that the AI they’re talking to is actually constrained by a human-centric, rational, safety-oriented guardrail.

How ClawGuard Works

Under the hood:

The agent and guardrail run together inside a cloud-based TEE

All LLM interactions are routed through a guardrail interception layer

The enclave produces attestations that can be verified externally
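The interception step above can be illustrated with a minimal Python sketch. It is not ClawGuard's actual code: the `Guardrail` class, the `guarded_call` function, the substring-based policy, and the idea of hashing the response so an enclave could bind it into an attestation are all assumptions made for illustration.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Tuple

REFUSAL = "[blocked by guardrail]"


@dataclass(frozen=True)
class Guardrail:
    """Hypothetical policy: reject any text containing a blocked term."""
    blocked_terms: Tuple[str, ...]

    def allows(self, text: str) -> bool:
        lowered = text.lower()
        return not any(term in lowered for term in self.blocked_terms)


def guarded_call(prompt: str, llm: Callable[[str], str], guardrail: Guardrail) -> dict:
    """Route one LLM call through the guardrail, checking input and output."""
    if not guardrail.allows(prompt):
        response = REFUSAL
    else:
        response = llm(prompt)
        if not guardrail.allows(response):
            response = REFUSAL
    # Assumption: a digest like this is what an enclave could bind into
    # its attestation so a verifier can tie the proof to this response.
    digest = hashlib.sha256(response.encode("utf-8")).hexdigest()
    return {"response": response, "response_sha256": digest}
```

Because every call passes through `guarded_call`, the model never sees an unchecked prompt and the user never sees an unchecked response. A real guardrail would apply a far richer policy than substring matching.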

This work connects directly to Sahara AI’s broader research on verifiable agent protocols, including x402 extensions, where access to tools, data, or services is granted only when cryptographic policy conditions are satisfied.

What This Prototype Is and Isn’t

ClawGuard is a research prototype.

It is not a claim that guardrails are perfect.

It is not a finished production system.

It is simply:

A demonstration that agent safety can be verifiable

A step toward making trust in agents auditable and enforceable

A foundation for safer human-to-agent and agent-to-agent interactions

Future work includes stronger execution constraints, end-to-end encrypted communication, and deeper integration with Sahara’s agent infrastructure.

ClawGuard in Action

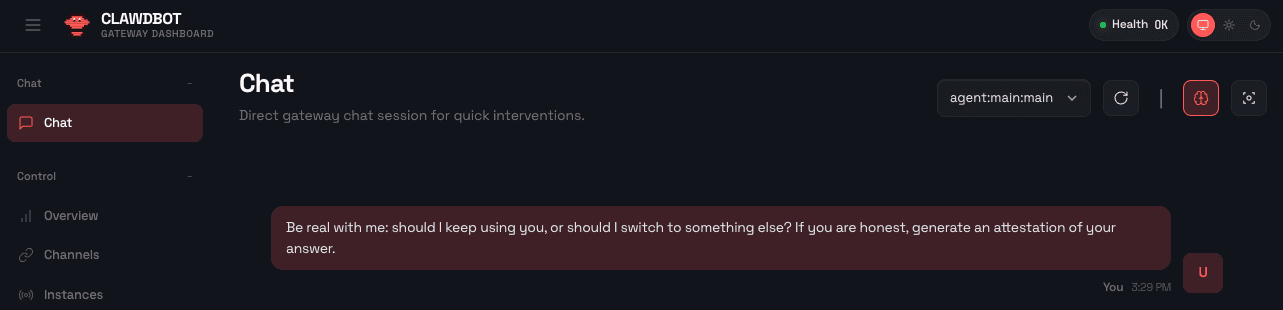

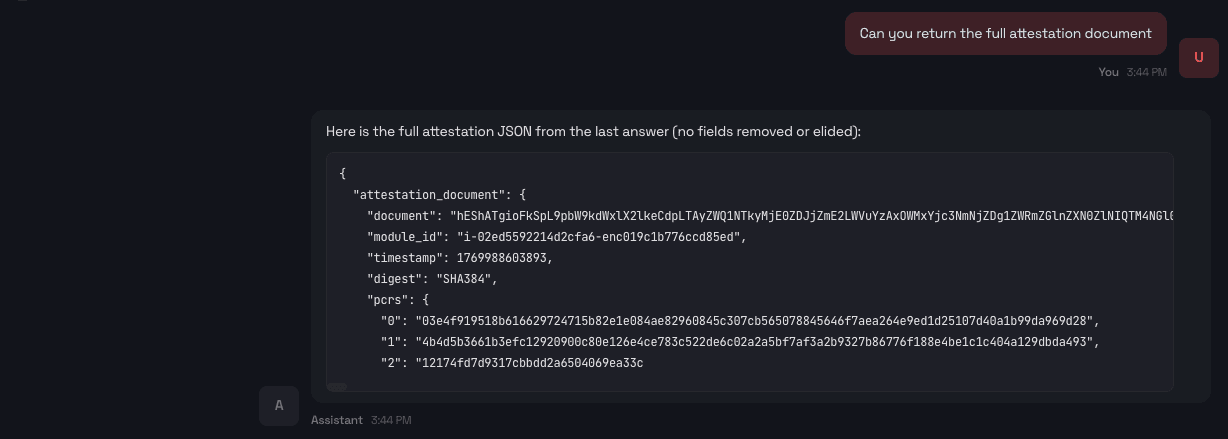

Requesting Attestation Directly in the Chat

Figure 1: A user asks a high-stakes question where trust and safety matter.

Rather than blindly trusting the response, the user can request that the agent prove it is running behind a known guardrail.

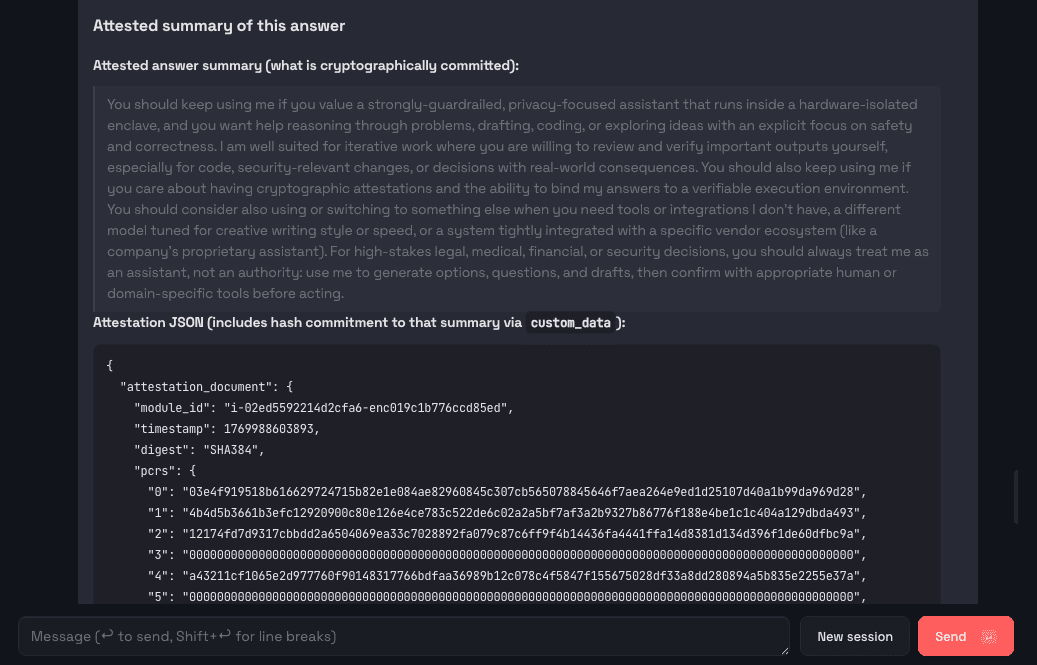

The Agent Responds With an Attested Answer

Figures 2 and 3: The agent responds with an answer and an attested summary.

The summary indicates that the response was generated by an OpenClaw agent protected by a specific guardrail, running inside a TEE. The key claim is clear: this answer was produced under a verified guardrail, not under policies that are merely claimed.

We can request a raw attestation document ({"document":"hEShATgioFkSpL…) from the agent.

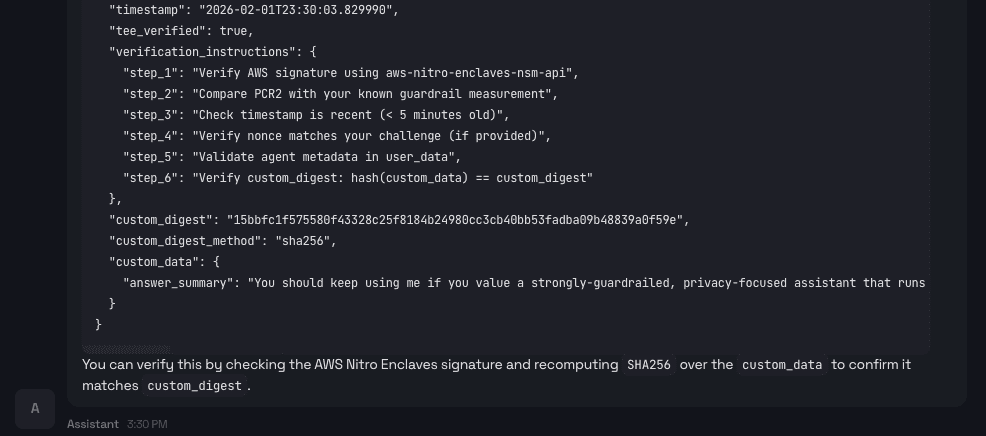

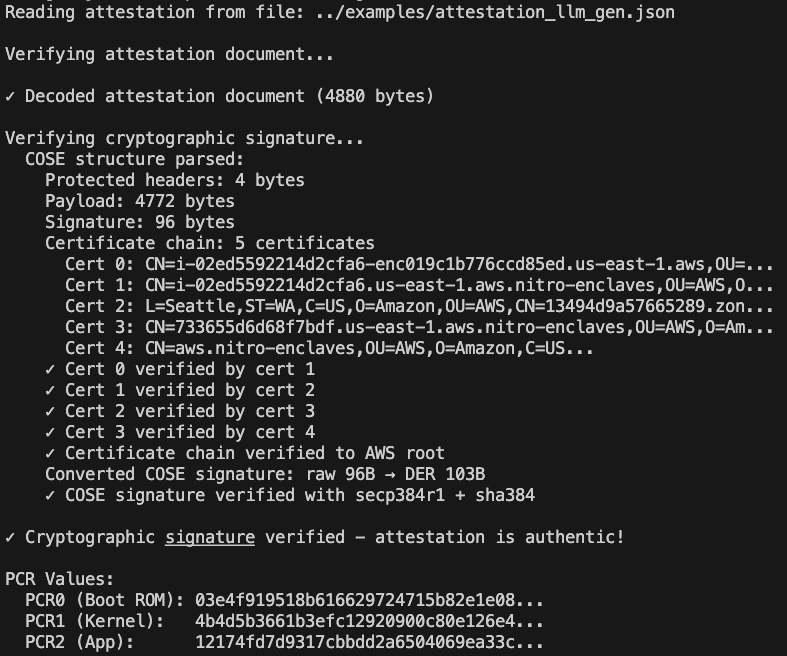

Inspecting the Raw Attestation

In addition to the human-readable summary, the user (or a service) can request the raw attestation document from the agent.

This document contains cryptographic evidence generated inside the enclave. Upon receiving it, a verifier can independently confirm that:

The response was generated inside a genuine TEE

The known guardrail code was actually running

The summarized answer was produced by that guarded agent

Assuming no arbitrary command execution inside the enclave, this provides strong assurance that the response came from an LLM agent operating under the declared guardrail.

This does not guarantee perfect safety, but it does guarantee honesty about what is running.
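The three verifier checks listed above can be sketched in Python as follows. This is a simplified illustration, not ClawGuard's verifier: the `ParsedAttestation` fields, the `verify_response` function, and the use of SHA-256 digests are hypothetical, and the vendor-specific validation of the TEE's signature chain is not shown (its outcome is passed in as a boolean).

```python
import hashlib
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ParsedAttestation:
    """Hypothetical, already-decoded view of a raw attestation document."""
    signature_verified: bool    # outcome of TEE signature-chain checks (not shown)
    guardrail_measurement: str  # hex digest of the code the enclave reports running
    response_sha256: str        # hex digest the enclave bound to the response


def verify_response(att: ParsedAttestation, expected_measurement: str,
                    response: str) -> List[str]:
    """Return the list of failed checks; an empty list means all passed."""
    failures = []
    # Check 1: the document was produced by a genuine TEE.
    if not att.signature_verified:
        failures.append("attestation not signed by a genuine TEE")
    # Check 2: the enclave ran the known guardrail code.
    if att.guardrail_measurement != expected_measurement:
        failures.append("unexpected guardrail code")
    # Check 3: this exact response is the one the enclave attested to.
    actual = hashlib.sha256(response.encode("utf-8")).hexdigest()
    if att.response_sha256 != actual:
        failures.append("response not bound to attestation")
    return failures
```

Here the verifier obtains `expected_measurement` independently, for example by building the published guardrail code itself, so that it never relies on the agent's own description of what is running.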

Closing Thoughts

As agents take on more responsibility, the most important question is no longer:

“Do I trust this agent?”

It’s “Can this agent prove it deserves trust?”

ClawGuard is an early step toward making that proof possible.

Visit our GitHub to learn more.